Keen-eyed Asio users may have noticed that Boost 1.42 includes a new example, HTTP Server 4, that shows how to use stackless coroutines in conjunction with asynchronous operations. This follows on from the coroutines I explored in the previous three posts, but with a few improvements. In particular:

- the pesky entry pseudo-keyword is gone; and

- a new fork pseudo-keyword has been added.

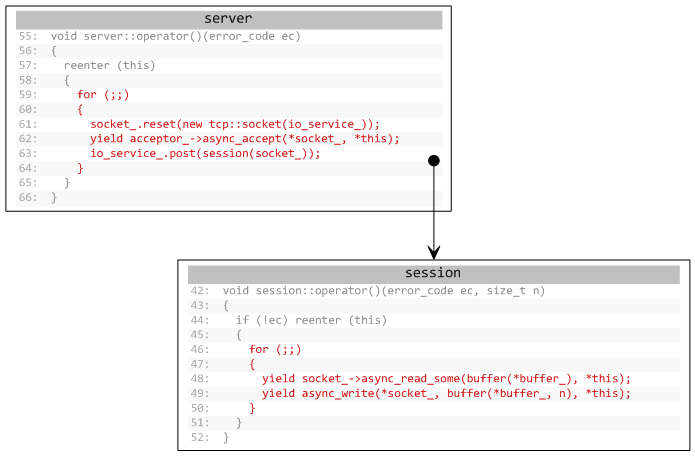

The result bears a passing resemblance to C#'s yield and friends. This post aims to document my stackless coroutine API, but before launching into a long and wordy explanation, here's a little picture to liven things up:

[ Click image for full size. This image was generated from this source code using asioviz. ]

class coroutine

Every coroutine needs to store its current state somewhere. For that we have a class called coroutine:

class coroutine

{

public:

coroutine();

bool is_child() const;

bool is_parent() const;

bool is_complete() const;

};

Coroutines are copy-constructible and assignable, and the space overhead is a single int. They can be used as a base class:

class session : coroutine

{

...

};

or as a data member:

class session

{

...

coroutine coro_;

};

or even bound in as a function argument using bind() (see previous post). It doesn't really matter as long as you maintain a copy of the object for as long as you want to keep the coroutine alive.
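
To make the single-int overhead concrete, here is a minimal sketch of how such a class could be laid out. The name coroutine_sketch, the member value_, the state encoding (negative for a child, -1 for complete) and the set_state()/state() accessors are assumptions made for illustration; they are not taken from the Asio sources.

```cpp
#include <cassert>

// Hypothetical stand-in for the coroutine class described above.
class coroutine_sketch
{
public:
  coroutine_sketch() : value_(0) {}

  // Assumed encoding: 0 = not started, > 0 = parent resume point,
  // < 0 = child of a fork, -1 = complete.
  bool is_child() const { return value_ < 0; }
  bool is_parent() const { return !is_child(); }
  bool is_complete() const { return value_ == -1; }

  // Illustration-only accessors; in the post the macros manage the state.
  void set_state(int v) { value_ = v; }
  int state() const { return value_; }

private:
  int value_;  // the only data member
};

static_assert(sizeof(coroutine_sketch) == sizeof(int),
    "the state should cost exactly one int");

// Copying or assigning a coroutine copies its resume point.
inline void copy_demo()
{
  coroutine_sketch a;
  a.set_state(42);
  coroutine_sketch b(a);  // copy construction
  coroutine_sketch c;
  c = a;                  // assignment
  assert(b.state() == 42 && c.state() == 42);
  assert(b.is_parent() && !b.is_complete());
}
```

Because a copy carries the resume point with it, handing a copy of the function object to an asynchronous operation is enough to keep the coroutine alive.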

reenter

The reenter macro is used to define the body of a coroutine. It takes a single argument: a pointer or reference to a coroutine object. For example, if the base class is a coroutine object you may write:

reenter (this)

{

... coroutine body ...

}

and if the coroutine object is a data member or other variable, you can write:

reenter (coro_)

{

... coroutine body ...

}

When reenter is executed at runtime, control jumps to the location of the last yield or fork.

The coroutine body may also be a single statement. This lets you save a few keystrokes by writing things like:

reenter (this) for (;;)

{

...

}

Limitation: The reenter macro is implemented using a switch. This means that you must take care when using local variables within the coroutine body: a local variable must not be defined in a position where reentering the coroutine could bypass its definition.
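
The restriction is the ordinary C++ rule that a jump to a case label must not skip over an initialized variable. The following hand-written state machine is a sketch of the switch technique, not the actual expansion of the reenter and yield macros; the counter struct and its members are hypothetical. State that must survive a reentry lives in members rather than in locals.

```cpp
#include <cassert>

// Hand-written sketch of a switch-based coroutine body (hypothetical names).
struct counter
{
  int state;  // resume point; plays the role of the coroutine object
  int i;      // loop variable kept as a member so it survives reentry

  counter() : state(0), i(0) {}

  // Returns 1, 2, 3 on successive calls, then -1 once finished.
  int operator()()
  {
    switch (state)
    {
    case 0:
      // A declaration with an initializer here, such as "int n = 0;",
      // would not compile: the jump to "case 1" would bypass it.
      for (i = 1; i <= 3; ++i)
      {
        state = 1;  // remember where to resume
        return i;   // leave the body
    case 1:;        // reentry lands here, inside the loop
      }
      state = -1;   // ran off the end of the body
      break;
    default:
      break;
    }
    return -1;
  }
};

// Usage: each call resumes where the previous one left off.
inline void counter_demo()
{
  counter c;
  assert(c() == 1);
  assert(c() == 2);
  assert(c() == 3);
  assert(c() == -1);
}
```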

yield statement

This form of the yield keyword is often used with asynchronous operations:

yield socket_->async_read_some(buffer(*buffer_), *this);

This divides into four logical steps:

- yield saves the current state of the coroutine.

- The statement initiates the asynchronous operation.

- The resume point is defined immediately following the statement.

- Control is transferred to the end of the coroutine body.

When the asynchronous operation completes, the function object is invoked and reenter causes control to transfer to the resume point. It is important to remember to carry the coroutine state forward with the asynchronous operation. In the above snippet, the current class is a function object with a coroutine object as base class or data member.

The statement may also be a compound statement, and this permits us to define local variables with limited scope:

yield

{

mutable_buffers_1 b = buffer(*buffer_);

socket_->async_read_some(b, *this);

}
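
The four steps listed above can be traced in a hand-written sketch that needs no sockets. Everything here is an assumption made for illustration: fake_queue stands in for an io_service, fake_async_read for a real asynchronous operation, and the explicit switch for the reenter and yield macros. The point to notice is that the state is saved before *this is copied, so the copy held by the pending operation carries the resume point forward.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <memory>
#include <string>

// Hypothetical stand-in for an io_service: a queue of pending completions.
typedef std::deque<std::function<void()> > fake_queue;

// Hypothetical asynchronous read: stores the handler and "completes" later.
template <typename Handler>
void fake_async_read(fake_queue& q, std::shared_ptr<std::string> out, Handler h)
{
  q.push_back([out, h]() mutable { *out = "hello"; h(); });
}

struct reader
{
  int state;                          // resume point, i.e. the coroutine state
  fake_queue* queue;
  std::shared_ptr<std::string> data;  // shared, so every copy sees the result
  std::shared_ptr<std::string> log;

  void operator()()
  {
    switch (state)
    {
    case 0:
      state = 1;                             // 1. save the current state
      fake_async_read(*queue, data, *this);  // 2. initiate; *this is copied
      return;                                // 4. leave the coroutine body
    case 1:                                  // 3. resume point
      *log += "read:" + *data;
      state = -1;                            // finished
      break;
    default:
      break;
    }
  }
};

inline std::string run_reader()
{
  fake_queue q;
  reader r = { 0, &q, std::make_shared<std::string>(),
      std::make_shared<std::string>() };
  r();                    // starts the operation and returns at once
  assert(q.size() == 1);  // one completion pending, nothing logged yet
  while (!q.empty())
  {
    std::function<void()> op = q.front();
    q.pop_front();
    op();                 // invokes the stored copy, which resumes at case 1
  }
  return *r.log;          // "read:hello"
}
```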

yield return expression ;

This form of yield is often used in generators or coroutine-based parsers. For example, the function object:

struct interleave : coroutine

{

istream& is1;

istream& is2;

char operator()(char c)

{

reenter (this) for (;;)

{

yield return is1.get();

yield return is2.get();

}

}

};

defines a trivial coroutine that interleaves the characters from two input streams.

This type of yield divides into three logical steps:

- yield saves the current state of the coroutine.

- The resume point is defined immediately following the semicolon.

- The value of the expression is returned from the function.
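
Written out by hand, the three steps might look like the following sketch. It uses an explicit switch in place of the macros, the name interleave_sketch is hypothetical, and the call operator takes no argument and returns int (an assumption, so that end-of-file remains distinguishable from a character).

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>

struct interleave_sketch
{
  int state;  // resume point
  std::istream& is1;
  std::istream& is2;

  interleave_sketch(std::istream& a, std::istream& b)
    : state(0), is1(a), is2(b) {}

  int operator()()
  {
    switch (state)
    {
    case 0:
      for (;;)
      {
        state = 1;         // save the state
        return is1.get();  // return the value of the expression
    case 1:                // resume point after the first yield return
        state = 2;
        return is2.get();
    case 2:;               // resume point after the second yield return
      }
    }
    return std::char_traits<char>::eof();  // only reached for an unknown state
  }
};

// Usage: six calls alternate between the two streams.
inline void interleave_demo()
{
  std::istringstream a("abc"), b("123");
  interleave_sketch next(a, b);
  std::string out;
  for (int i = 0; i < 6; ++i)
    out += static_cast<char>(next());
  assert(out == "a1b2c3");
}
```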

yield ;

This form of yield is equivalent to the following steps:

- yield saves the current state of the coroutine.

- The resume point is defined immediately following the semicolon.

- Control is transferred to the end of the coroutine body.

This form might be applied when coroutines are used for cooperative threading and scheduling is explicitly managed. For example:

struct task : coroutine

{

...

void operator()()

{

reenter (this)

{

while (... not finished ...)

{

... do something ...

yield;

... do some more ...

yield;

}

}

}

...

};

...

task t1, t2;

for (;;)

{

t1();

t2();

}
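
A runnable variation on this idea is sketched below, again with a hand-written switch instead of the macros. The names task_sketch and run_tasks, the remaining counter and the shared log are all assumptions made for the demonstration. Unlike the endless loop above, this scheduler stops once both tasks have terminated.

```cpp
#include <cassert>
#include <string>

struct task_sketch
{
  int state;         // resume point; -1 means finished (assumed encoding)
  char name;
  int remaining;     // loop iterations still to run
  std::string* log;  // shared log, so the interleaving is visible

  bool is_complete() const { return state == -1; }

  void operator()()
  {
    switch (state)
    {
    case 0:
      while (remaining > 0)
      {
        *log += name; *log += "1 ";  // ... do something ...
        state = 1; return;           // yield;
    case 1:
        *log += name; *log += "2 ";  // ... do some more ...
        --remaining;
        state = 2; return;           // yield;
    case 2:;
      }
      state = -1;                    // ran off the end: terminated
      break;
    default:
      break;
    }
  }
};

// Round-robin scheduling: each call runs one task up to its next yield.
inline std::string run_tasks()
{
  std::string log;
  task_sketch t1 = { 0, 'A', 1, &log };
  task_sketch t2 = { 0, 'B', 1, &log };
  while (!t1.is_complete() || !t2.is_complete())
  {
    t1();
    t2();
  }
  assert(t1.remaining == 0 && t2.remaining == 0);
  return log;  // "A1 B1 A2 B2 "
}
```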

yield break ;

The final form of yield is adopted from C# and is used to explicitly terminate the coroutine. This form comprises two steps:

- yield sets the coroutine state to indicate termination.

- Control is transferred to the end of the coroutine body.

Note that a coroutine may also be implicitly terminated if the coroutine body is exited without a yield, e.g. by return, throw or by running to the end of the body.

fork statement ;

The fork pseudo-keyword is used when "forking" a coroutine, i.e. splitting it into two (or more) copies. One use of fork is in a server, where a new coroutine is created to handle each client connection:

reenter (this)

{

do

{

socket_.reset(new tcp::socket(io_service_));

yield acceptor->async_accept(*socket_, *this);

fork server(*this)();

} while (is_parent());

... client-specific handling follows ...

}

The logical steps involved in a fork are:

- fork saves the current state of the coroutine.

- The statement creates a copy of the coroutine and either executes it immediately or schedules it for later execution.

- The resume point is defined immediately following the semicolon.

- For the "parent", control immediately continues from the next line.

The functions is_parent() and is_child() can be used to differentiate between parent and child. You would use these functions to alter subsequent control flow.

Note that fork doesn't do the actual forking by itself. It is your responsibility to write the statement so that it creates a clone of the coroutine and calls it. The clone can be called immediately, as above, or scheduled for delayed execution using something like io_service::post().
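
The mechanics can be sketched without any networking. In the hand-written version below, a shared counter stands in for the acceptor, the clone is called immediately, and the switch replaces the macros. The name server_sketch, the negative-state encoding for the child and the limit of three connections are assumptions made for the demonstration.

```cpp
#include <cassert>
#include <string>

struct server_sketch
{
  int state;         // assumed encoding: < 0 marks the child of a fork
  int connection;    // id of the connection this copy handles
  int* accepted;     // shared counter standing in for the acceptor
  std::string* log;

  bool is_child() const { return state < 0; }
  bool is_parent() const { return !is_child(); }

  void operator()()
  {
    switch (state)
    {
    case 0:
      do
      {
        connection = ++*accepted;  // stands in for the yielded async_accept
        state = -2;                // fork: save the state, marked as child
        server_sketch(*this)();    // the statement: clone and call the clone
        state = 2;                 // the original continues as the parent
    case -2:;                      // resume point, reached by the clone
      } while (is_parent() && *accepted < 3);  // 3 is an arbitrary demo limit

      if (is_child())              // client-specific handling
        *log += "client " + std::to_string(connection) + "; ";
      break;
    default:
      break;
    }
  }
};

inline std::string fork_demo()
{
  int accepted = 0;
  std::string log;
  server_sketch s = { 0, 0, &accepted, &log };
  s();
  assert(s.is_parent());  // the original object remains the parent
  return log;             // "client 1; client 2; client 3; "
}
```

Each clone enters the switch at the resume point, fails the is_parent() test and drops out of the loop into the client handling, while the original loops back to accept the next connection.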